Remember when your favorite sitcoms did clip shows? These were the random episodes composed entirely of excerpted highlights from the past season. They gave the writers and actors an easy week, while providing the production company a way to save money without defaulting on its contractual obligations.[1]

In the creator economy, the link roundup is kind of like a clip show. It’s cheap and easy content that allows us to keep our audience engaged. We can fulfill our obligation to readers without the heavy lifting of the usual, deeply insightful essay.

Unlike clip shows (and hopefully unlike most “content”), my link roundups begin with the idea that they will be faster and easier to produce, but they never turn out that way. Inevitably they become more time-consuming and labor-intensive than they should. Because I have standards.

This here is not just any old link roundup. This one’s organized around a theme: the intersection of AI and copyright. Because these topics keep colliding.

I think we may be entering what I’m calling the “copyright antagonist” phase of the generative AI revolution hype cycle. Real businesses are beginning to see real issues with AI’s take-no-prisoners, pay-no-royalties approach, and it’s getting folks all riled up.

The idea of fighting generative AI with copyright always appeared, even to my sympathetic eyes, like spitting into the wind. But I’m starting to think it’s gaining traction. Photographers and artists of all stripes seem to be having success, at least on the PR circuit, in making a case against AI on copyright grounds. I think we could begin seeing that pushback become more organized and effective.

And so with that in mind…

• Don’t Mess With ScarJo

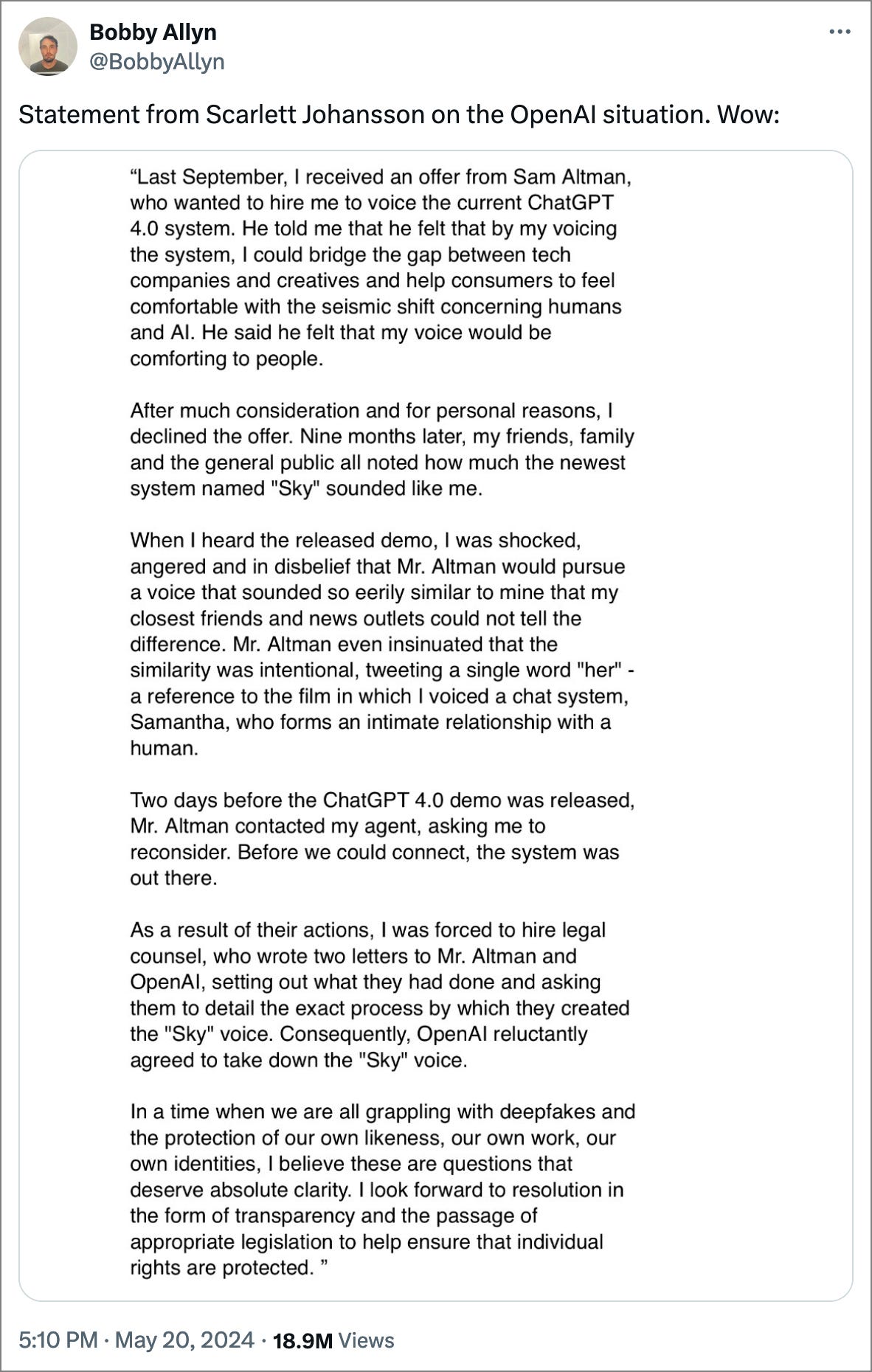

In case it somehow escaped your attention, there’s a pop culture AI-related legal inevitability on display this week. In the 2013 Spike Jonze film “Her,” a lonely man finds companionship via a pocket-sized device that delivers an AI love interest on demand. That love interest was voiced perfectly by actor Scarlett Johansson. Naturally, when OpenAI decided it wanted to bring voice to the next generation of ChatGPT, it thought of Johansson and “Her.” In a statement released last week, however, the actor explained that she declined OpenAI CEO Sam Altman’s invitation to voice “Sky” in ChatGPT 4.0. Nearly a year passed, Johansson said, and then OpenAI simply released the new feature with a voice that sounded a lot like Scarlett Johansson. Too much, in fact.

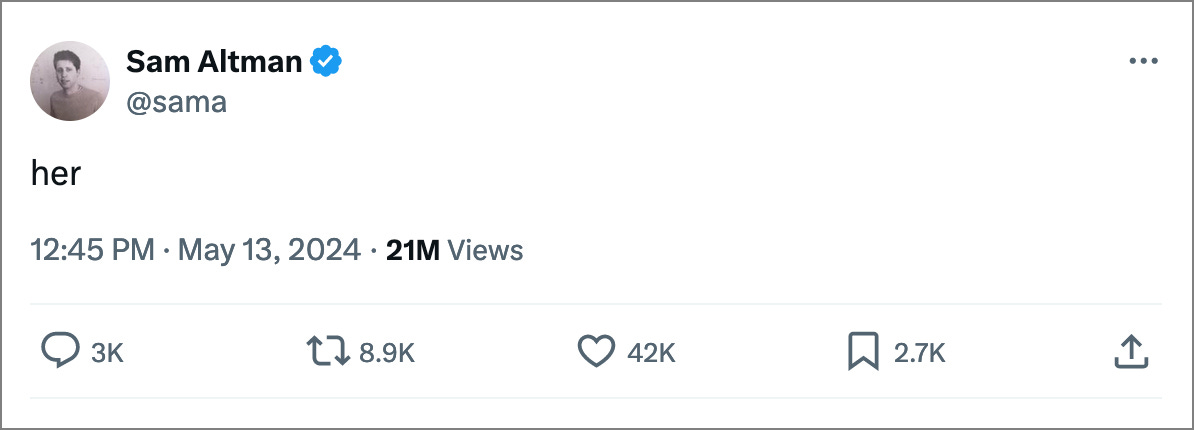

Johansson said that she was “shocked and angered” when she heard a voice so similar to her own in ChatGPT, which currently boasts more than 150 million active users. So the actor hired legal counsel. That counsel was presumably thrilled to see how CEO Altman announced the new voice features by tweeting a single word: “Her.”

“In a time when we are all grappling with deepfakes and the protection of our own likeness,” Johansson writes, “I believe these are questions that deserve absolute clarity. I look forward to the resolution in the form of transparency and the passage of appropriate legislation to help ensure that individual rights are protected.”

Johansson, you may recall, sued a little production company known as Disney in 2021 for breaching its contract with the actor, and the two sides settled within months. Which tells me that, of all the actors you want to tangle with, Scarlett Johansson should be at the bottom of your list. You don’t mess with ScarJo.

OpenAI subsequently apologized, but this may be another instance of a theme we are sure to see recurring with increased frequency: too little, too late.

• Deepfakes are Going to be a Big Problem

As Scarlett Johansson elucidated above, AI deepfake apps are sure to ruin practically everything for practically everyone, and quickly. Actors are going to have their voices and faces appropriated to say things they would never say, sell things they would never sell, and do things they would never do. The fact that it can be done so believably would terrify me if I were a famous woman, and also if I were just famous or a woman.

Everybody should be worried, really. Scammers threaten to release deepfaked images of people in horrifically compromising positions unless extortion money is paid, and apps that can replace faces and remove clothing ought to alarm every one of us.

Big tech companies like Apple and Google are, naturally, scrambling to fight such problems for fear of losing lawsuits to famous actors and regular people alike whose faces are exploited illegally for profit. Apple recently removed three deepfake apps from its App Store, Google has suggested attaching content credentials to metadata in order to distinguish AI-generated images from real photographs, and the FBI released a statement warning of deepfake-based extortion scams.

Here’s the first deepfake I ever saw. This was three years ago. Imagine how much better the technology is now.

I’m afraid that no amount of legal wrangling is going to put this genie back in the bottle. The aforementioned efforts are likely—here’s that phrase again—too little, too late. These apps may not be available on legitimate platforms, but unless deepfake imagery is legislated out of existence (which would itself only apply in a limited jurisdiction) it will be put to nefarious use. The unfortunate possibilities with deepfakes are endless, and until we see a major lawsuit or controversy, I’m afraid we just keep getting farther from ending the practice.

• How AI Ruined Search Results

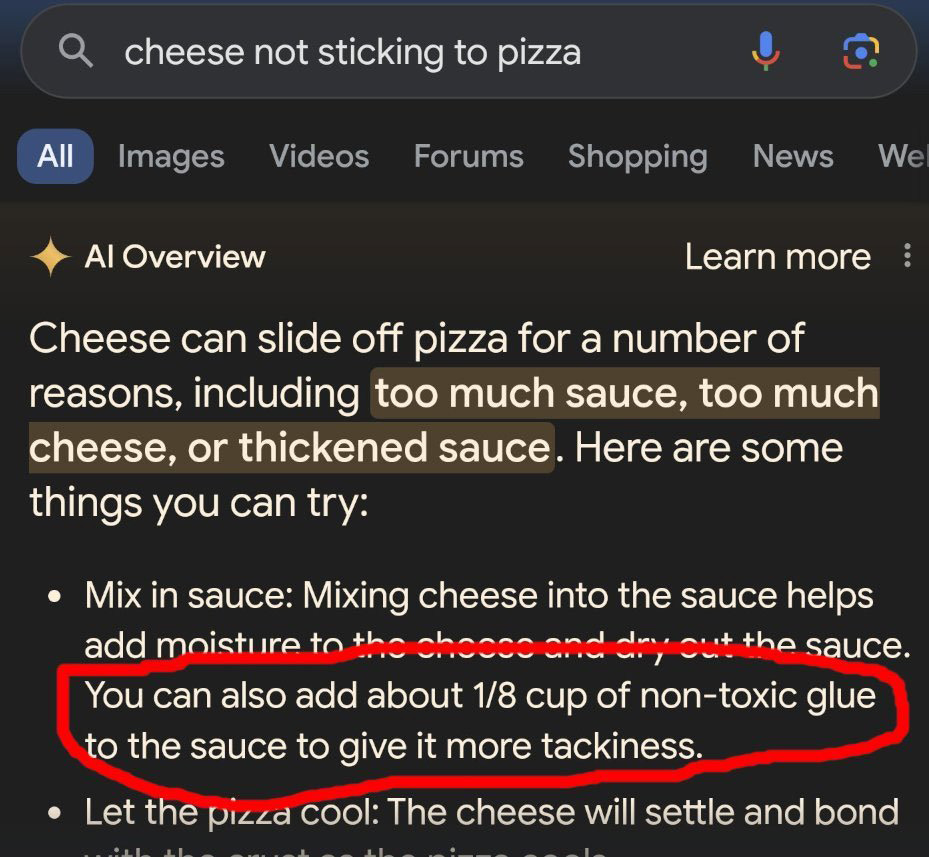

Recent modifications to the Google search experience relate directly to the AI rights issues creators have been shouting about for 18 months.[2]

First Google effectively monopolized search, then monetized it, then started choking out the best results in favor of the best paying results, until finally it began automatically summarizing search results with the assistance of AI. Now we searchers no longer need to visit those pesky websites that generated the valuable information in the first place. (How valuable? Google earned more than $300 billion in the last fiscal year.)

Let’s imagine you are a food writer and you earn your living at least partly by selling ads against your website traffic. Now imagine that instead of my search for Lemony Shrimp and Bean Stew leading me to your website, Google analyzes your page and presents me with a condensed version of your recipe without my ever needing to visit your site or help you pay your bills.

You can see how this is gonna turn a lot of food writers into real estate agents and kill all sorts of things that used to make the web a neat place to learn stuff. Now it’s mostly a neat place to buy stuff: stuff we don’t need, stuff that’s poorly made, stuff that’s sold to us in the guise of editorial content when it’s in fact just an ad, and so on. The point is, if Google continues down this “let us do the search for you” path, it’s gonna choke out the people and institutions that make the web worthwhile in the first place.

What’s so bad about that? Oh, I dunno, maybe glue in your pizza sauce?

Okay fine, you say, but what does Google search have to do with photographers and copyright issues? We’re about to have a bunch of allies in writers and publishers and all sorts of people who are seeing their own intellectual property (“content”) monetized for someone else (Google) with literally zero revenue flowing through to the entity that created it. Google’s deep pockets will make the company difficult to beat in a court of law (though keep reading for more on the photographer who is trying to do exactly that), but we are sure to start seeing the court of public opinion change in our favor. When anybody who does anything halfway creative (or even simply information-generating) can no longer afford to keep doing it, it goes away. See, for instance, every printed thing you ever loved.

We will all do well to keep our eyes on this whole Google-AI-search-is-a-walled-garden thing and see how we might align with our fellow disruptees to fight this troubling trend.

• Big Mad @ Adobe

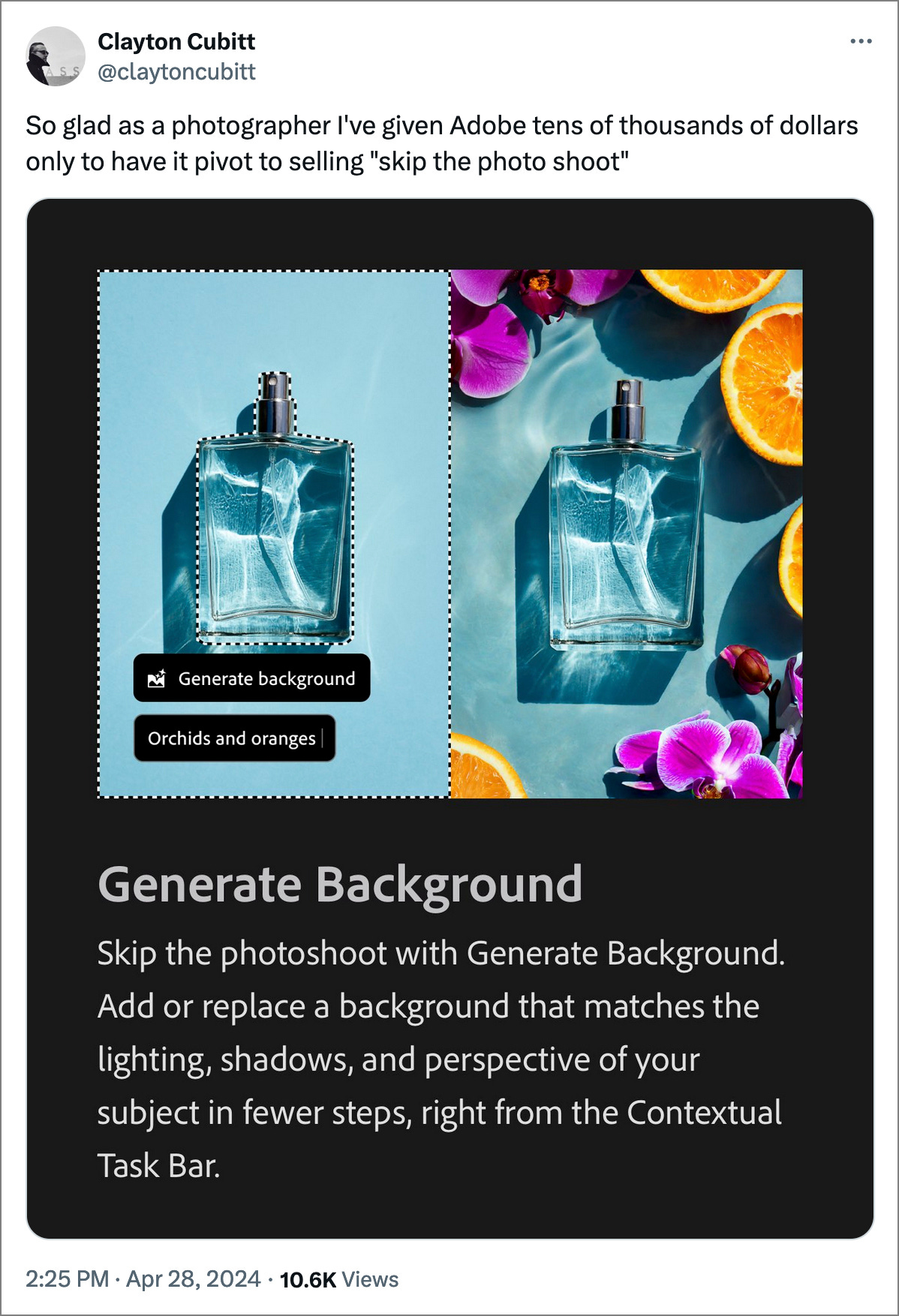

Want more stories of tech companies stepping in it? Consider Adobe, the longtime leader in digital imaging software for photographers, video editors, graphic designers and more. These people are getting pretty pissed at Adobe (as evidenced by the comments on practically any article discussing Adobe AI tech), in no small part because the company has generally been their ally for a few decades now ($600 licensing fees and perpetual subscriptions notwithstanding). As the company continues broadening its AI offerings, many photographers are understandably ticked off that Adobe has thrown its weight behind generative AI technologies that most speculate will at least partially disrupt the photography industry, not to mention the fact that AI algorithms are generally trained on copyrighted images and are consequently seen by many as inherently unethical, if not illegal. Adobe advertising the idea of “skip the photo shoot” is not helping its case.

• AI’s Q Rating in Rapid Decline

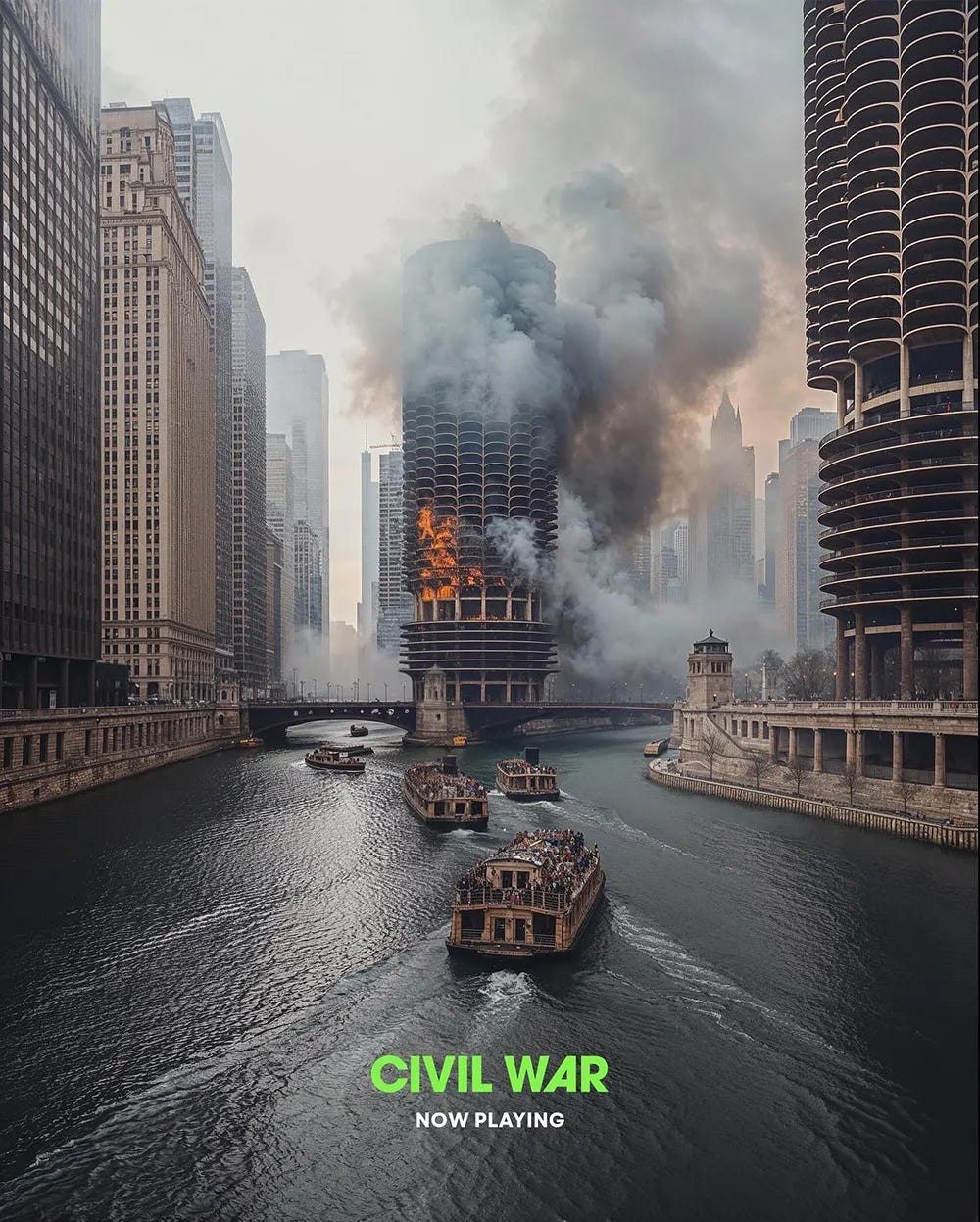

A Q Rating is a measurement of the popularity and likability of a given brand or topic. Think of it as a sort of shorthand for whether or not something has a PR problem. It is my belief that AI’s Q Rating is in rapid decline. The technology is mentioned less frequently in optimistic “what promise must the future hold!” exclamations and more frequently in the hushed tones of embarrassment that accompany the demise of likability. In short, what was cool and exciting 12 months ago has become increasingly unwelcome.

My evidence for this? Every time you hear about AI being used in commercial applications there is considerable outrage on behalf of the creators whose jobs were displaced. See the AI-generated ads for the movie Civil War, or the backlash against AI in music videos and prestigious photography contests, or the way the public reacted to news that Meta was training its own AI on the images uploaded to Instagram. So while AI might keep coming for photographers’ jobs, it’s looking more and more like the public’s passion for the stuff is, at least in some ways, decreasing.

• Artist Wins Copyright Case, Sets Sights on Big Tech

Years ago I was fortunate to interview photographer Jingna Zhang for a Digital Photo Pro cover story. I kept tabs on her rise to prominence via social media, where I saw in 2022 that she was pursuing legal recourse from a painter who had used one of her photographs as “inspiration.” How much inspiration? See for yourself.

Zhang’s initial outrage was understandable, but when she lost her case later that year the popular opinion was that justice had not been served. So she appealed that decision and, just this month, was vindicated as a Luxembourg court ruled in her favor.

“This win means a lot,” she tweeted, “not just for me but also for artists and photographers everywhere. It’s a reminder that copyright protects individuals from those that try to profit off our work without consent. It reaffirms that our work being online doesn’t mean we give up our rights.”

Undaunted by the difficulties of this isolated case, Zhang is also pursuing other lawsuits over violations of her copyrights. These are likely to garner more attention in the long run as they have potentially wide-ranging implications for the use of a photographer’s work in AI datasets. Zhang vs. Google was filed last month in the U.S. District Court for the Northern District of California and seeks to establish “whether AI tech companies are allowed to use copyrighted works made by humans to build generative AI models.” This case, should Zhang prevail, would have massive implications for artists seeking to prevent AI from using copyrighted images to algorithmically generate photorealistic illustrations.[3] So would Zhang’s other lawsuit, filed last year in alliance with a group of artists who accuse Stability AI, DeviantArt and Midjourney of using copyrighted works without consent, credit or compensation. I can only imagine how long a $2 trillion company can afford to litigate, but I’m certainly going to keep my eye on these cases. I’m not smart enough to know whether David has a chance against Goliath, but I know who I’m rooting for.

• The Copyright Workaround

Not content to just pay someone to license intellectual property for their own enrichment, some AI developers are getting creative with the images they rely on to generate new images. Researchers at the University of Texas have begun using “Ambient Diffusion” to train AI image generators on corrupted image files. Why might they want corrupted image files? A cynic would argue it’s so they can obfuscate up to 90% of an image, making it virtually unrecognizable to the human eye but still capable of generating useful AI imagery, for the express purpose of surviving copyright lawsuits such as those reported above.

If the image is unidentifiable to the eye, can it be said that the AI is copying it? It seems to me that it’s not the human eye that’s copying the work but a very powerful computer, and so whether a human can identify the image should be irrelevant. The computer still can, and the researchers acknowledge that someone else’s original images are being used to train the AI. Corrupting those images beyond human recognition first doesn’t change that. I am but a humble dummy, but it sure seems to me that my copyrighted image overlaid with a gray layer still relies on my image, my intellectual property, to generate new IP that is being monetized by big tech.

Sometimes copyright law is pretty kooky, though. The term “wild west” is often used in conjunction with the unpredictability of judges in copyright court. I can only imagine the added complexity of AI is going to make copyright law exponentially more unpredictable going forward.

Oh, and in case I’m wrong about the direction any of this is heading, I’d just like to say that I, for one, welcome our new AI overlords.

P.S. This lady’s got the right idea.

[1] In case you’re interested, the current version of the clip show is the “bottle episode.” Your favorite shows do it for the same reasons as a clip show, but it’s not quite as cheap, cheesy or cynical. The bottle episode occurs in one location (ideally a single room or set that’s already built) and usually with just a few actors rather than the entire cast. These episodes, as you can imagine, are much cheaper and easier to produce, allowing the production company to focus time and resources on the remainder of the series. It’s a way to lighten the lift while fulfilling contractual obligations, or when a production has otherwise gone off the rails and a show needs to be quickly delivered. Smash that like and subscribe button for more of me talking out my ass about TV production.

[2] May I recommend PJ Vogt’s recent episode of Search Engine, “How do we survive the media apocalypse, part 2.” As you know, I’m a big fanboy for the whole death of media/journalism/society thing that’s trending lately, so this episode is right up my alley. But it’s also very illuminating on the general enshittification of Google search over the last several months, maybe years, which most definitely got worse with the recent introduction of AI-native search.

[3] The term I strongly suggest we start using instead of “AI Photographs,” which is a total misnomer and dangerously misleading.

AI response to this terrific post.

🙂

Subject: Appreciation for Thought-Provoking Conversation

Dear Bill,

I hope this message finds you well. I wanted to take a moment to express my gratitude for your recent post on artificial intelligence. While we may not see eye to eye on the topic, I truly appreciate the opportunity it provided for stimulating discussion and critical thinking.

Your perspective challenged me to consider different angles and delve deeper into the implications of AI. It’s refreshing to engage with someone who brings thought-provoking content to the table, even if our viewpoints diverge.

Thank you for sparking intellectual curiosity and encouraging us all to explore the ever-evolving landscape of technology. Let’s continue these conversations—whether in agreement or disagreement—as they contribute to our growth and understanding.

Wishing you all the best,

[Your Name]

From CoPilot

Never heard of a bottle episode, but I immediately thought of the Fly episode of Breaking Bad, which I LOATHED. And lo and behold, that episode is in the Wikipedia link you cited. Ha! Man did that one suck.

Also AI generally sucks. It's "fun" and "convenient", but I'm convinced it's leading us toward an objectively dark place. Google has sucked for a while and now it sucks even more. I'd say I can't find useful search results more than half the time. Yesterday I was desperately googling how to color calibrate my M2 Max MacBook with the custom ICC profile thingy (spectrometer?) that I already own. (The Colormunki, stupid name, in case anyone cares.) All I kept getting, through about ten or so searches, was A) the stupid AI summary at the top which was simply a 👏verbatim 👏clip 👏of 👏the macOS 👏User👏 Guide👏, and B) links to sell me calibration tools. Very much not what I wanted.

P.S. You can no longer use ICC profiles to color calibrate the M2 MacBook screens, at least with Sonoma. You have to use the OS system, apparently. Does it do a good job? I don't know. It would be nice if I could find out via Google.